Can I Afford It? Prompt Engineering Cost & Performance Tool

An end-to-end platform to benchmark and compare prompt engineering strategies across OpenAI and Anthropic models. It features a modern chat UI, real-time analytics, and a neural token estimator to forecast API costs.

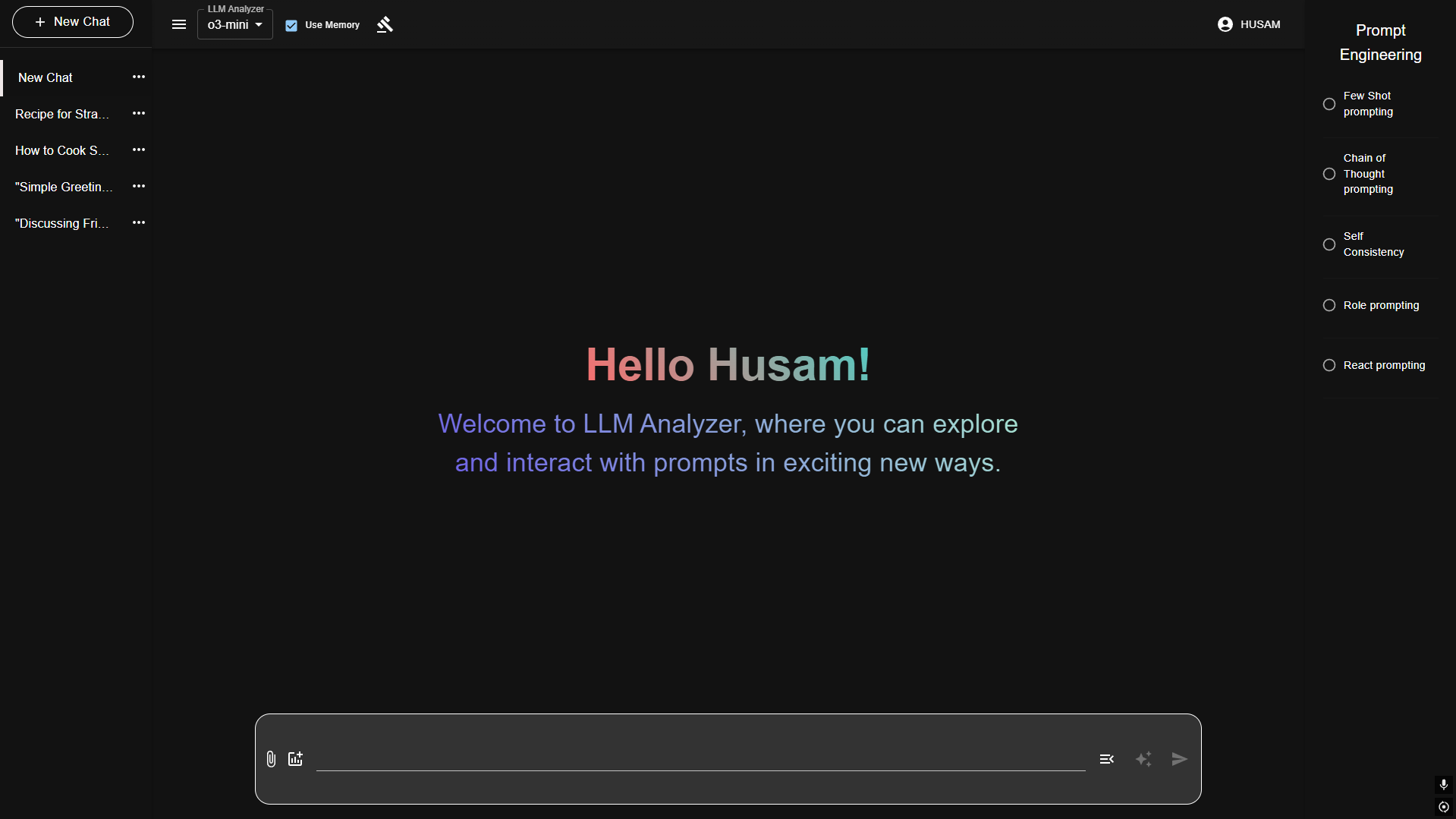

Personalized Welcome Screen: The home screen greets the user with a personalized chat interface. From here, users can upload files, select prompt types, and view real-time token counts, so prompt engineering and LLM experimentation happen in one place.

Prompt Input Bar: Easily compose prompts, upload files, and see a live token count. The AI assist button helps refine prompts for better results.

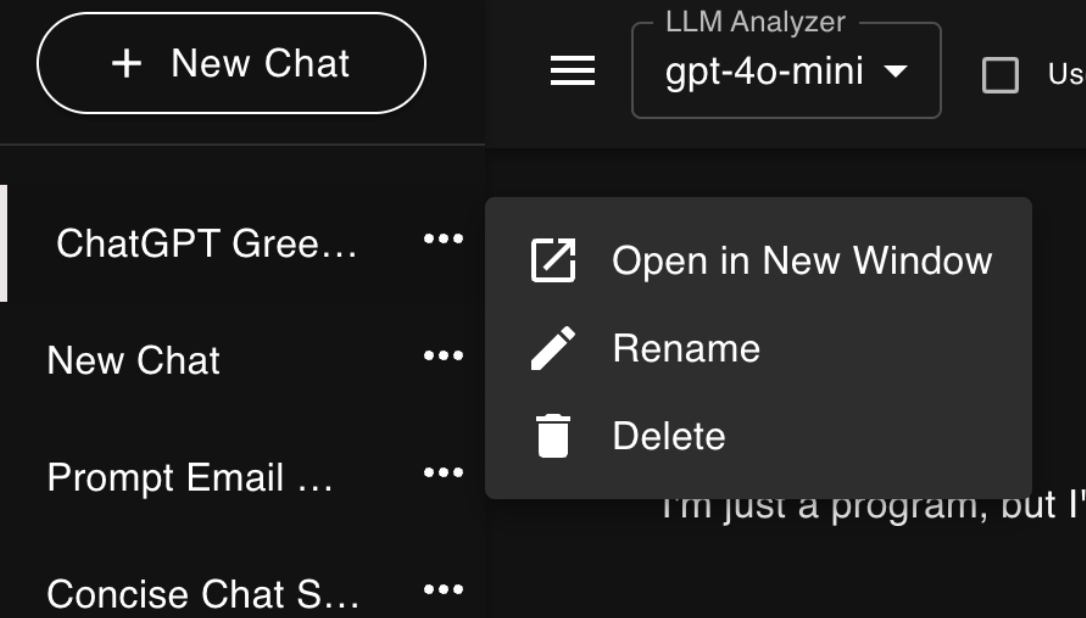

Chat List & Session Management: Manage multiple chat sessions. Rename a session, open it in a new window, or delete it to keep the workspace clean.

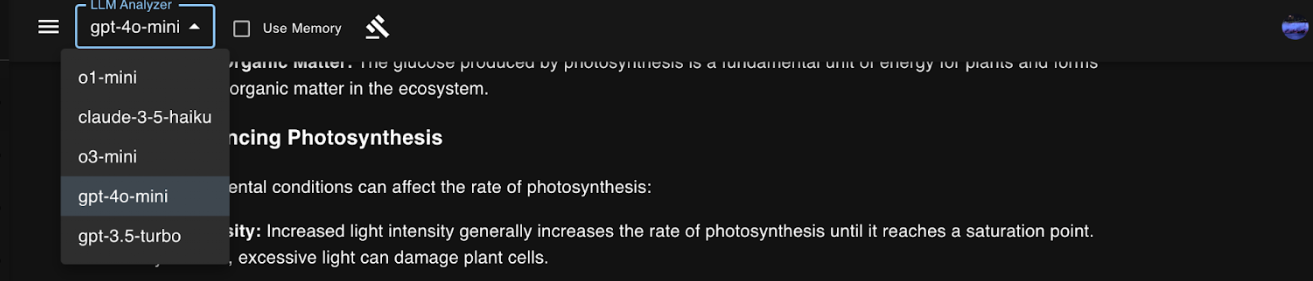

Model Selector & Judge: Switch between LLMs, toggle memory, and use the Judge feature for automatic scoring of responses.

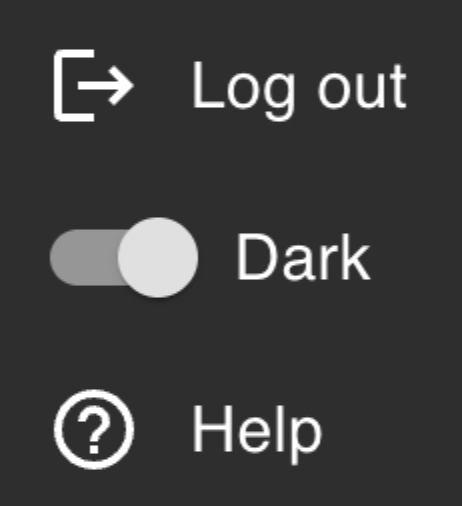

User Menu: Access logout, theme toggle, and help resources quickly from the user menu.

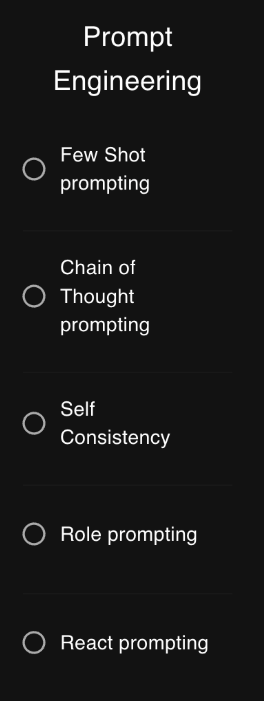

Prompt Engineering Drawer: Select advanced prompting strategies like Few Shot, Chain of Thought, and more.
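As a rough illustration of how a strategy picked in the drawer might change the text sent to the model, the sketch below wraps a question in a few-shot or chain-of-thought template. The function name `buildPrompt`, the strategy keys, and the template wording are assumptions made for this example, not the project's actual code.

```javascript
// Hypothetical helper: wraps a question in a prompting-strategy template.
// Strategy keys and template wording are illustrative assumptions.
function buildPrompt(strategy, question, examples = []) {
  switch (strategy) {
    case "few-shot": {
      // Prepend worked examples so the model can imitate the Q/A pattern.
      const shots = examples.map((ex) => `Q: ${ex.question}\nA: ${ex.answer}`);
      return [...shots, `Q: ${question}\nA:`].join("\n\n");
    }
    case "chain-of-thought":
      // Ask for intermediate reasoning before the final answer.
      return `${question}\n\nLet's think step by step.`;
    default:
      // No strategy selected: send the question unchanged.
      return question;
  }
}

console.log(
  buildPrompt("few-shot", "What is 3 + 4?", [
    { question: "What is 1 + 1?", answer: "2" },
  ])
);
```

Keeping each strategy as a pure string transformation makes it straightforward to run the same question through several strategies and compare token counts side by side.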

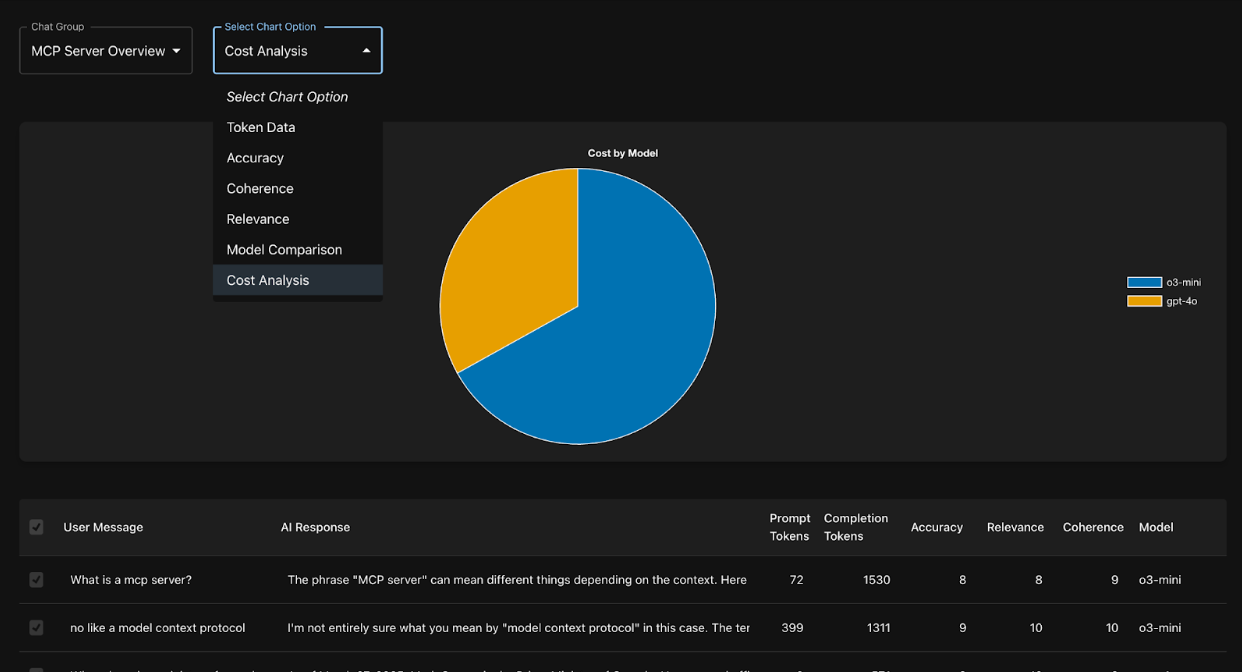

Analytics Dashboard: Visualize token usage, cost, accuracy, and more. Compare models and prompt strategies with real data.

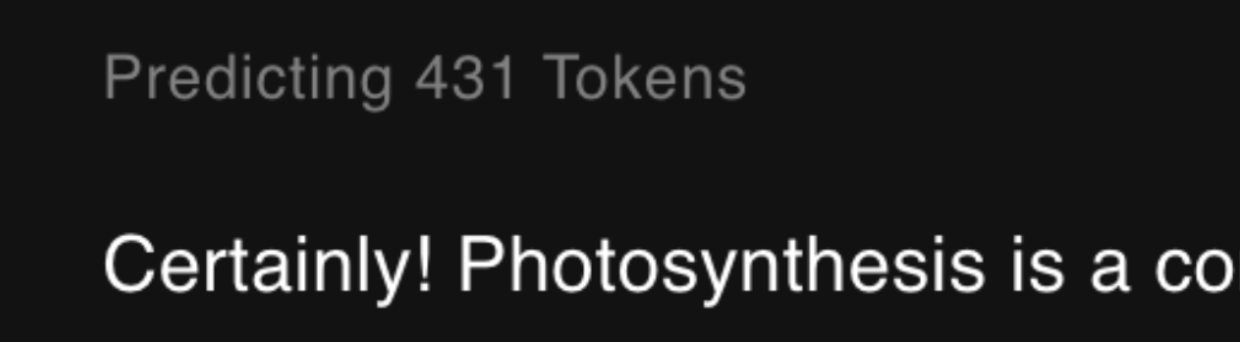

Token Prediction: A neural token estimator predicts the number of completion tokens before the model responds, helping users anticipate cost and latency.
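To show how a predicted completion length could turn into a cost forecast, here is a minimal sketch. The model names and per-million-token prices are placeholders chosen for the example, not real provider pricing, and `forecastCost` is a hypothetical helper rather than part of the project.

```javascript
// Placeholder prices in USD per one million tokens.
// These names and numbers are assumptions, NOT real provider pricing.
const PRICES_PER_MILLION = {
  "example-small": { input: 0.5, output: 1.5 },
  "example-large": { input: 5.0, output: 15.0 },
};

// Forecast the cost of one request from the known prompt token count and
// the estimator's predicted completion token count.
function forecastCost(model, promptTokens, predictedCompletionTokens) {
  const price = PRICES_PER_MILLION[model];
  if (!price) {
    throw new Error(`No price configured for model: ${model}`);
  }
  const inputCost = (promptTokens / 1_000_000) * price.input;
  const outputCost = (predictedCompletionTokens / 1_000_000) * price.output;
  return inputCost + outputCost;
}

// 1200 prompt tokens plus a predicted 400 completion tokens.
const usd = forecastCost("example-large", 1200, 400);
console.log(`Estimated cost: $${usd.toFixed(4)}`);
```

Because output tokens are usually priced higher than input tokens, an error in the predicted completion length tends to dominate the error in the forecast.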

Libraries & Frameworks

| Category | Library/Framework | Purpose |
|---|---|---|
| Frontend | React.js | Core library for building the UI. |
| | React Router | Manage protected and public routes based on user authentication state. |
| | Axios | Handle API calls for login, registration, and token validation. |
| | Material UI | Design and style the user interface. |
| | React Hook Form | Simplify form handling and validation for login and registration. |
| | Socket.io | Real-time communication for instant updates or notifications. |
| | EventSource | Real-time communication for instant updates or notifications (if applicable). |
| Backend | Node.js | Runtime environment for server-side code. |
| | Express.js | Create routes for authentication and other application logic. |
| | bcrypt | Hash and compare passwords securely. |
| | multer | Handle file uploads. |
| | socket.io | Enable real-time, bidirectional communication between client and server. |
| | Google API | Authenticate Google users and interact with Google services (Mail, Drive, Search, Docs, etc.). |
| | CORS | Handle cross-origin requests securely. |
| Database | MongoDB | Store user credentials and other data (like prompt history). |
| | Mongoose | Simplify MongoDB interactions with a schema-based model. |
| Visualization | Chart.js | Render token-cost graphs. |
| | Figma (Design) | Create and refine the frontend design and wireframes. |

The project leverages a modern stack: React, Material UI, and Chart.js for the frontend; Node.js, Express, and MongoDB for the backend; and PyTorch for token estimation. Real-time features are powered by Socket.io. The table above summarizes the key libraries and their purposes.

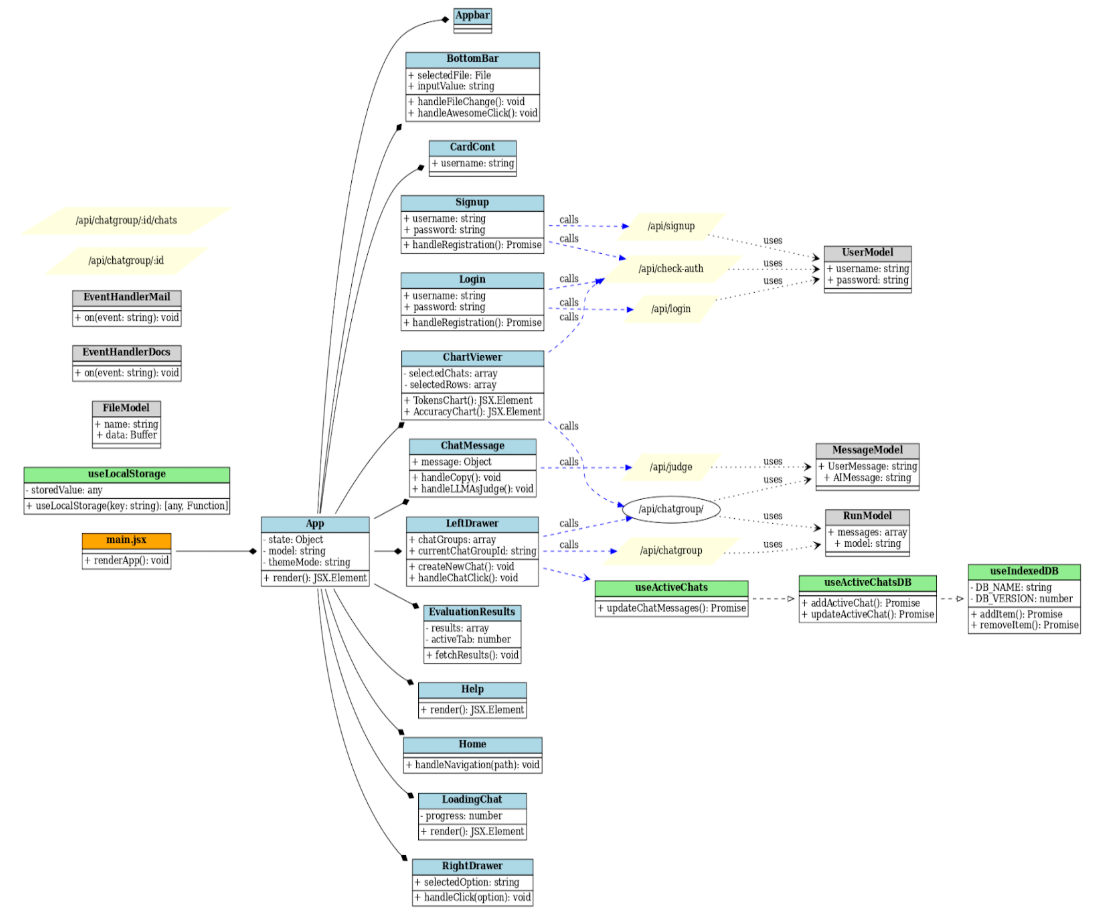

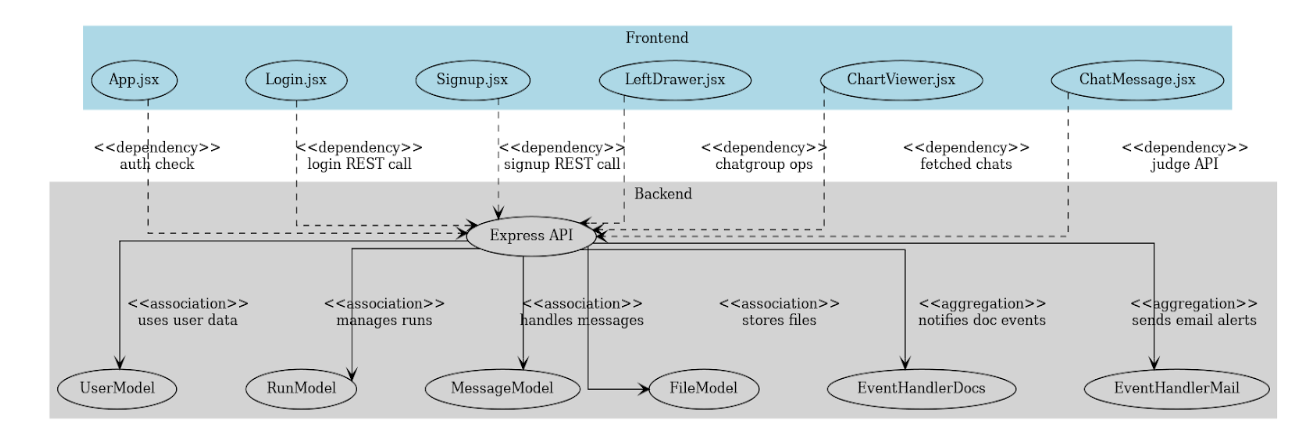

Class Diagram

The class diagram illustrates the structure and relationships between the main components, models, and utility functions in the LLM Analyzer application.

Component Diagram

The component diagram shows the interaction between frontend React components and backend Express APIs, highlighting the flow of data and responsibilities across the system.

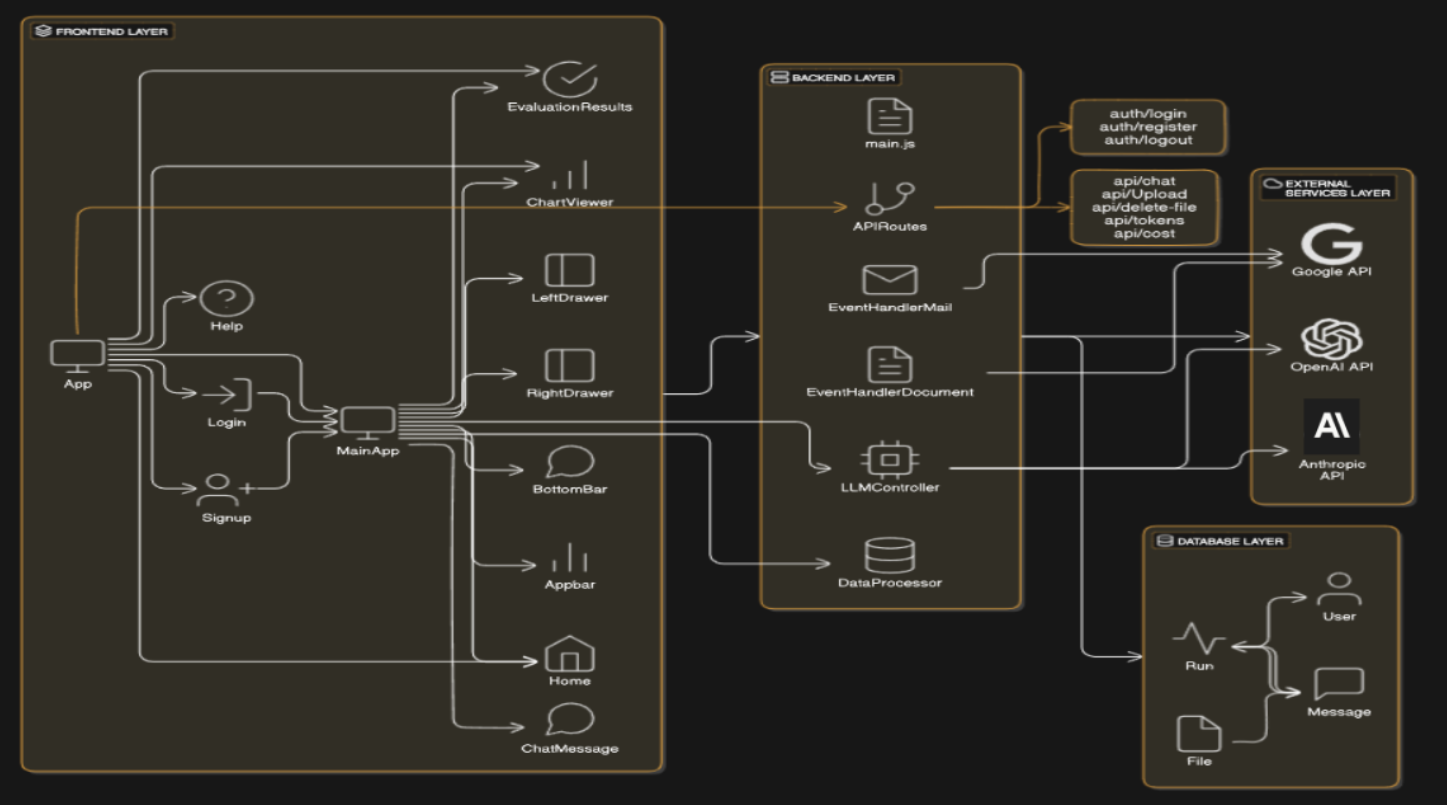

Architecture Diagram

The architecture diagram provides a high-level overview of the system, including the frontend, backend, database, and external service layers, and how they interact to deliver the application's features.

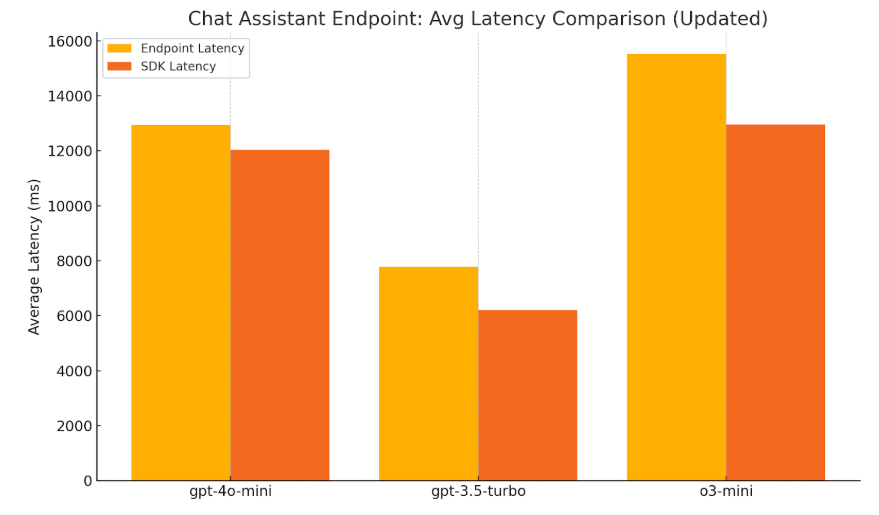

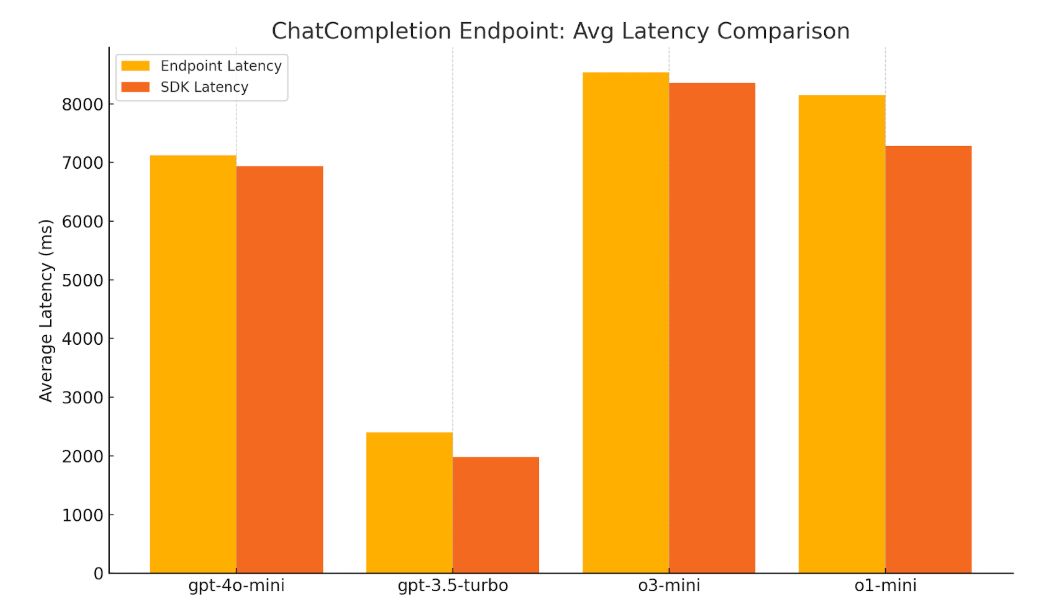

Performance Measurement Results

Performance Benchmark Test: The latency benchmarks compare the response times of our local API endpoints with direct calls through the official OpenAI SDK. The local endpoints add a modest delay from the additional processing layers, while latency remains acceptable for real-time applications.
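One way such an overhead comparison could be set up is sketched below. The helper names (`timeCall`, `median`, `measureOverhead`) are hypothetical, and the two `sleep` calls are stand-ins for a direct SDK call and a call through the local endpoint; the source does not describe its actual benchmark harness.

```javascript
// Time one async call in milliseconds using the high-resolution clock.
async function timeCall(fn) {
  const start = performance.now();
  await fn();
  return performance.now() - start;
}

// The median is less sensitive to outliers (cold starts, network spikes)
// than the mean. Expects a non-empty array.
function median(values) {
  const sorted = [...values].sort((a, b) => a - b);
  const mid = Math.floor(sorted.length / 2);
  return sorted.length % 2 === 0
    ? (sorted[mid - 1] + sorted[mid]) / 2
    : sorted[mid];
}

// Alternate between the two paths so drift affects both equally,
// then report the median latency of each and the difference.
async function measureOverhead(direct, wrapped, runs = 5) {
  const directMs = [];
  const wrappedMs = [];
  for (let i = 0; i < runs; i++) {
    directMs.push(await timeCall(direct));
    wrappedMs.push(await timeCall(wrapped));
  }
  const d = median(directMs);
  const w = median(wrappedMs);
  return { directMs: d, wrappedMs: w, overheadMs: w - d };
}

// Stand-ins for real calls: 20 ms "direct" versus 30 ms "wrapped".
const sleep = (ms) => new Promise((resolve) => setTimeout(resolve, ms));
measureOverhead(() => sleep(20), () => sleep(30)).then((result) =>
  console.log(result)
);
```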

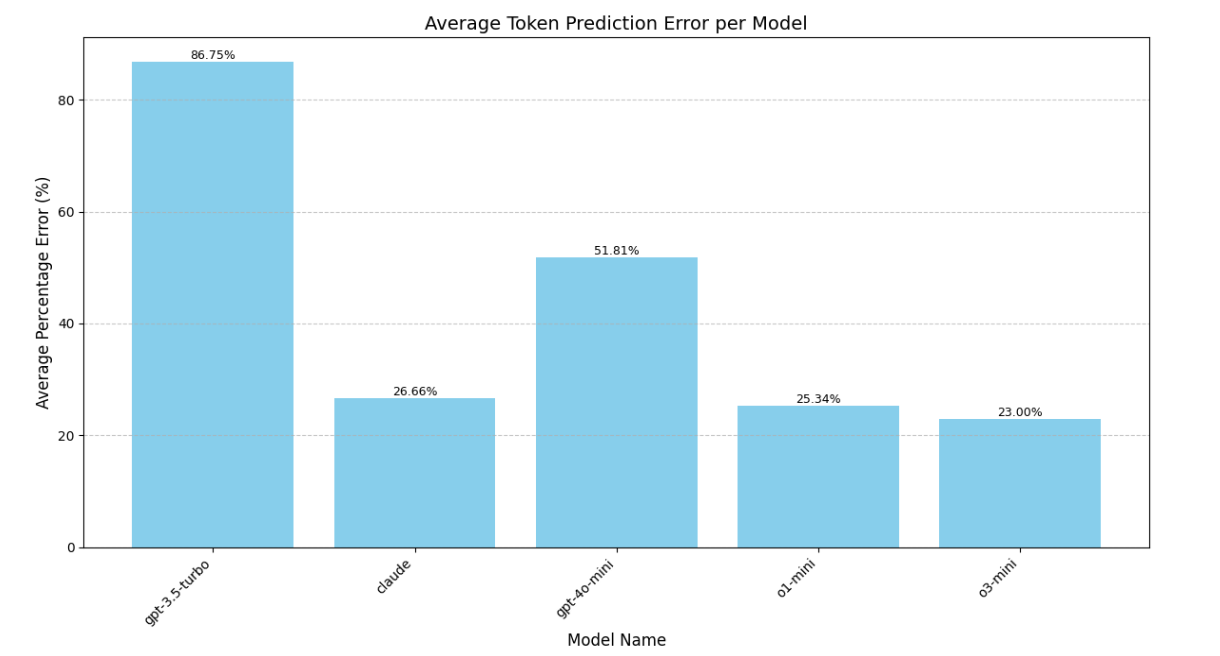

Token Estimation Accuracy Test: The token estimator achieves an average error of ~25% for most models, demonstrating strong performance for a lightweight model across a wide range of LLMs.
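The source does not say how the average error is computed. One common choice for this kind of figure is mean absolute percentage error (MAPE), sketched here as an assumption; the sample token counts are made up for illustration.

```javascript
// Mean absolute percentage error between predicted and actual token counts.
// Returns a percentage, e.g. 25 means predictions are off by 25% on average.
function meanAbsolutePercentageError(predicted, actual) {
  if (predicted.length !== actual.length || predicted.length === 0) {
    throw new Error("Inputs must be non-empty arrays of equal length");
  }
  let total = 0;
  for (let i = 0; i < actual.length; i++) {
    if (actual[i] === 0) {
      throw new Error("Actual token counts must be non-zero");
    }
    total += Math.abs(predicted[i] - actual[i]) / actual[i];
  }
  return (total / actual.length) * 100;
}

// Made-up sample values, for illustration only.
const predictedTokens = [120, 80, 300];
const actualTokens = [100, 100, 400];
console.log(meanAbsolutePercentageError(predictedTokens, actualTokens).toFixed(2));
```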

Key Features & Results

Key Features

- Interactive chat with file upload, token counter, and prompt-type selector

- Analytics dashboard: token usage, latency, BLEU/ROUGE/F1 scores, LLM-as-a-Judge ratings

- •Neural token-estimation model for forecasting API costs

- •Modular Node.js/Express backend with MongoDB for user sessions, messages, and files
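Of the accuracy metrics listed for the dashboard, F1 is the simplest to show in a few lines. The sketch below computes a token-overlap F1 between a model response and a reference answer. The tokenizer and the function names are assumptions for illustration; the project may compute its scores differently.

```javascript
// Split text into lowercase alphanumeric word tokens (a deliberately simple tokenizer).
function tokenize(text) {
  return text.toLowerCase().match(/[a-z0-9]+/g) ?? [];
}

// Token-overlap F1: harmonic mean of precision and recall over shared tokens.
function tokenF1(prediction, reference) {
  const predTokens = tokenize(prediction);
  const refTokens = tokenize(reference);
  if (predTokens.length === 0 || refTokens.length === 0) {
    // Two empty texts match perfectly; one empty text matches nothing.
    return predTokens.length === refTokens.length ? 1 : 0;
  }
  // Count reference tokens so a repeated word is matched at most
  // as many times as it occurs in the reference.
  const refCounts = new Map();
  for (const token of refTokens) {
    refCounts.set(token, (refCounts.get(token) ?? 0) + 1);
  }
  let overlap = 0;
  for (const token of predTokens) {
    const remaining = refCounts.get(token) ?? 0;
    if (remaining > 0) {
      overlap += 1;
      refCounts.set(token, remaining - 1);
    }
  }
  if (overlap === 0) return 0;
  const precision = overlap / predTokens.length;
  const recall = overlap / refTokens.length;
  return (2 * precision * recall) / (precision + recall);
}

console.log(tokenF1("The cat sat", "the cat sat on the mat").toFixed(3));
```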

Project Results

- ✓ Sub-second overhead for custom APIs

- ✓ Identified trade-offs between cost, speed, and accuracy

- ✓ Token estimator validated with ~25% average error on compact models

Quick Start & Configuration

AI Chat Application

Quick Start

git clone https://github.com/ArjunB3hl/prompt-app.git

cd prompt-app

npm install

Configuration

- Create a .env file in the root directory:

      OPENAI_API_KEY=your_openai_key
      ANTHROPIC_API_KEY=your_anthropic_key
      CLIENT_ID=your_google_client_id
      CLIENT_SECRET=your_google_client_secret
      REDIRECT_URI=http://localhost:5030/oauth2callback

- MongoDB Setup

  - macOS:

        brew tap mongodb/brew
        brew install mongodb-community
        brew services start mongodb-community

  - Windows:

    - Download MongoDB Community Server from the MongoDB Download Center

    - Follow the installation wizard

    - Start the MongoDB service

- Google Cloud Setup

  - Visit Google Cloud Console and create/select a project. Note your Project ID.

  - Enable APIs in "APIs & Services" > "Library":

    - Gmail API

    - Google Drive API

    - Google Docs API

  - Configure OAuth consent screen and add scopes:

        https://mail.google.com/
        https://www.googleapis.com/auth/drive
        https://www.googleapis.com/auth/documents

  - Create OAuth 2.0 credentials (Web application):

    - Add authorized origins: http://localhost:5030

    - Add authorized redirect URI: http://localhost:5030/oauth2callback
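Once the .env file and OAuth credentials are in place, a small startup check can catch a missing key before the first API call fails. The sketch below is a hypothetical helper, not part of the repository; it uses the variable names from the .env template above and assumes the values have already been loaded into `process.env`.

```javascript
// Variable names taken from the .env template in the Configuration steps.
const REQUIRED_ENV_VARS = [
  "OPENAI_API_KEY",
  "ANTHROPIC_API_KEY",
  "CLIENT_ID",
  "CLIENT_SECRET",
  "REDIRECT_URI",
];

// Return the names of required variables that are unset or blank.
function findMissingEnvVars(env, required = REQUIRED_ENV_VARS) {
  return required.filter((name) => !env[name] || env[name].trim() === "");
}

// Warn at startup instead of failing later inside a request handler.
const missing = findMissingEnvVars(process.env);
if (missing.length > 0) {
  console.warn(`Missing environment variables: ${missing.join(", ")}`);
}
```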

Features

- Prompting Techniques: Few Shot, Chain of Thought, Self Consistency, Role Playing, ReAct Prompting

- Token Analytics: Real-time token usage tracking, visualization, and historical analysis

- Google Integration: Email, document, and drive file management
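Among the prompting techniques listed, Self Consistency samples several answers to the same prompt and keeps the most frequent one. The aggregation step can be sketched as a majority vote; the function name and the normalization rule are assumptions for illustration, and the sampled answers are hard-coded rather than fetched from a model.

```javascript
// Pick the most frequent answer among several sampled completions.
// On a tie, the answer that appeared first wins.
function majorityVote(answers) {
  const counts = new Map();
  for (const raw of answers) {
    // Normalize so that "42 " and "42" count as the same answer.
    const key = raw.trim().toLowerCase();
    counts.set(key, (counts.get(key) ?? 0) + 1);
  }
  let best = null;
  let bestCount = 0;
  for (const [answer, count] of counts) {
    if (count > bestCount) {
      best = answer;
      bestCount = count;
    }
  }
  return { answer: best, votes: bestCount, total: answers.length };
}

// Hard-coded stand-ins for five sampled model responses.
console.log(majorityVote(["42", "41", "42 ", "42", "40"]));
```

Each extra sample multiplies the completion-token cost of a request, which is the kind of cost versus accuracy trade-off the analytics dashboard is meant to expose.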

Development

npm run dev

Contributing

- Fork the repository

- Create feature branch: git checkout -b feature-name

- Commit changes: git commit -am 'Add feature'

- Push branch: git push origin feature-name

- Submit pull request